Global Accessibility Awareness Day is this Thursday (May 19th) and Apple, like many other companies, is announcing assistive updates in honor of the occasion. The company is bringing new features across iPhone, iPad, Mac and Apple Watch, and the most intriguing of the lot is systemwide Live Captions.

Similar to Google’s implementation on Android, Apple’s Live Captions will transcribe audio playing on your iPhone, iPad or Mac in real time, displaying subtitles onscreen. It will also caption sound around you, so you can use it to follow along with conversations in the real world. You’ll be able to adjust the size and position of the caption box, and also choose different font sizes for the words. The transcription is generated on-device, too. But unlike on Android, Live Captions on FaceTime calls will also clearly distinguish between speakers, using icons and names to attribute what’s being said. Plus, those using Macs will be able to type a response and have it spoken aloud in real time for others in the conversation. Live Captions will be available as a beta in English for those in the US and Canada.

Apple is also updating its existing sound recognition tool, which lets iPhones continuously listen out for noises like alarms, sirens, doorbells or crying babies. With a coming update, users will be able to train their iPhones or iPads to listen for custom sounds, like a washing machine’s “I’m done” song or a pet duck quacking, perhaps. A new feature called Siri Pause Time will also let you extend the assistant’s wait time when you’re responding or asking for something, so you can take your time to finish saying what you need.

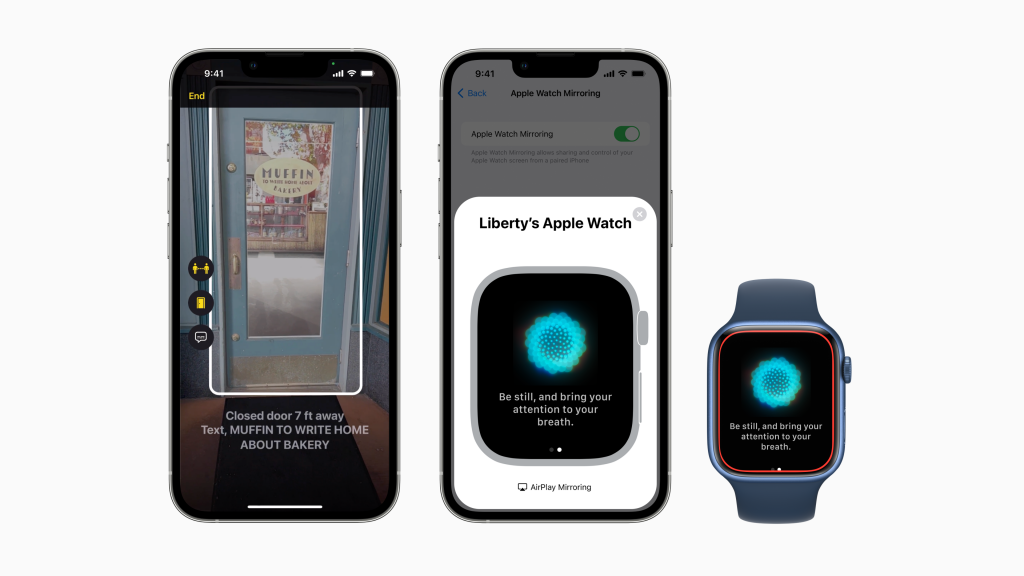

The company is updating its Magnifier app, which helps people who are visually impaired better interact with people and objects around them. Expanding on a previous People Detection tool that told users how far away others around them were, Apple is adding a new Door Detection feature. This will use the iPhone’s LiDAR and camera to not only locate and identify doors, but also read out text or symbols on display, like hours of operation and signs depicting restrooms or accessible entrances. In addition, it will describe the door’s handle and whether it requires a push, pull or turn of a knob, as well as the door’s color, shape, material and whether it’s closed or open. Together, People and Door Detection will be part of the new Detection mode in Magnifier.

Updates are also coming to Apple Watch. Last year, the company introduced Assistive Touch, which allowed people to interact with the wearable without touching the screen. The Watch would sense if the hand that it’s on was making a fist or if the wearer was touching their index finger and thumb together for a “pinch” action. With an upcoming software update, it should be faster and easier to enable Quick Actions in Assistive Touch, which would then let you use gestures like double pinching to answer or end calls, take photos, start a workout or pause media playback.

But Assistive Touch isn’t a method that everyone can use. For those with physical or motor disabilities that preclude them from using hand gestures altogether, the company is bringing a form of voice and switch control to its smartwatch. The feature is called Apple Watch Mirroring, and uses hardware and software including AirPlay to carry over a user’s preset voice or switch control preferences from their iPhones, for example, to the wearable. This would allow them to use their head-tracking, sound actions and Made For iPhone switches to interact with their Apple Watch.

Apple is adding more customization options to the Books app, letting users apply new themes and tweak line heights, word and character spacings and more. Its screen reader VoiceOver will also soon be available in more than 20 new languages and locales, including Bengali, Bulgarian, Catalan, Ukrainian and Vietnamese. Dozens of new voices will be added, too, as will a spelling mode for Voice Control that allows you to dictate custom spellings using letter-by-letter input.

Finally, the company is launching a new feature called Buddy Controller that will let people use two controllers to drive a single player, which would be helpful for users with disabilities who want to partner up with their care providers. Buddy Controller will work with supported game controllers for iPhone, iPad, Mac and Apple TV. There are plenty more updates coming throughout the Apple ecosystem, including on-demand American Sign Language interpreters expanding to Apple Store and Support in Canada as well as a new guide in Maps, curated playlists in Apple TV and Music and the addition of the Accessibility Assistant to the Shortcuts app on Mac and Watch. The features previewed today will be available later this year.

